Microsoft has released a framework to guide how it builds AI systems. Titled the Microsoft Responsible AI Standard, the set of policies represents an important milestone on the path toward developing better, more trustworthy AI.

The public release of the Responsible AI Standard is a collaborative step to share the company’s insights, invite feedback from others, and stimulate discussion about building better norms and practices around AI.

Guiding Product Development Towards More Responsible Outcomes

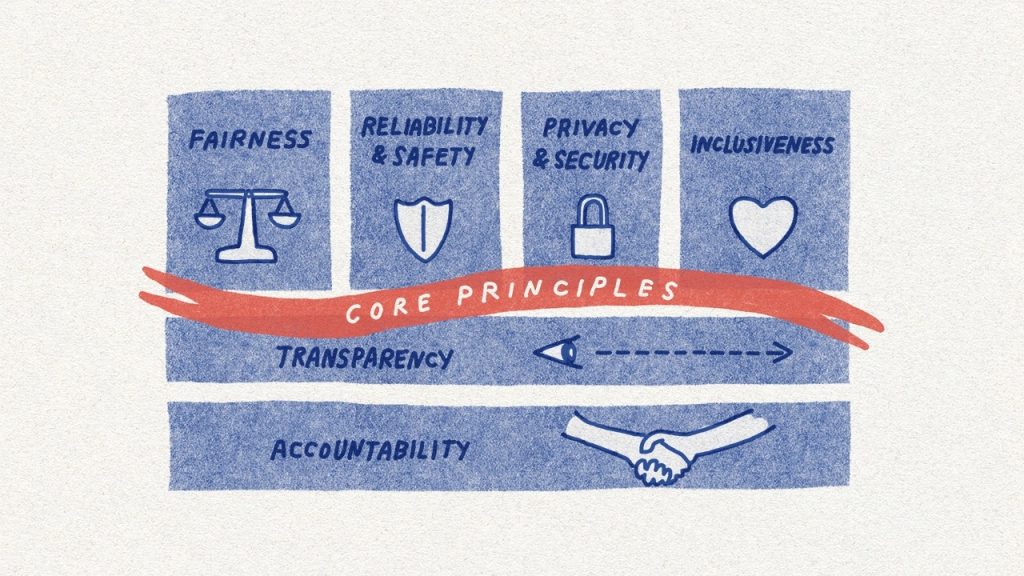

AI systems are the product of many different decisions made by those who develop and deploy them. From system purpose to how people interact with AI systems, Microsoft has declared it wants to put people and their goals at the center of system design decisions and strive to respect enduring values like fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability.

The Responsible AI Standard sets out the company’s thinking on how it will build AI systems to uphold these values and earn society’s trust. It provides specific, actionable guidance for teams that goes beyond the high-level principles that have dominated the AI landscape to date.

The Standard details concrete goals or outcomes that teams developing AI systems must strive to secure. These goals help break down a broad principle like ‘accountability’ into its key enablers, such as impact assessments, data governance, and human oversight. Each goal is then composed of a set of requirements, which are steps that teams must take to ensure that AI systems meet the goals throughout the system lifecycle. Finally, the Standard maps available tools and practices to specific requirements so that Microsoft’s teams implementing it have resources to help them succeed.
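The layering described above (principle, goals, requirements, mapped tools) can be pictured as a small nested data structure. The following is only an illustrative sketch: the class names, the helper `unmapped_requirements`, and the requirement wording are hypothetical placeholders, not text or tooling from the Standard itself.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Requirement:
    """A step a team must take. The wording used below is a placeholder."""
    description: str
    tools: List[str] = field(default_factory=list)  # tools/practices mapped to it


@dataclass
class Goal:
    """A concrete outcome that acts as an enabler of a principle."""
    name: str
    requirements: List[Requirement] = field(default_factory=list)


@dataclass
class Principle:
    """A broad value such as accountability."""
    name: str
    goals: List[Goal] = field(default_factory=list)


def unmapped_requirements(principle: Principle) -> List[str]:
    """Return descriptions of requirements with no tool or practice mapped yet."""
    return [
        req.description
        for goal in principle.goals
        for req in goal.requirements
        if not req.tools
    ]


# Hypothetical example: the goal names come from the article, while the
# requirement descriptions are invented purely for illustration.
accountability = Principle(
    name="Accountability",
    goals=[
        Goal("Impact assessment", [
            Requirement("Placeholder: assess impact early in design",
                        tools=["Impact Assessment template"]),
        ]),
        Goal("Data governance", [
            Requirement("Placeholder: document data sources"),
        ]),
        Goal("Human oversight", [
            Requirement("Placeholder: define who can intervene"),
        ]),
    ],
)

print(unmapped_requirements(accountability))
```

Modeling the hierarchy this way makes it easy to ask questions such as which requirements still lack supporting resources, which is the gap the tool mapping is meant to close.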

The Core Components of Microsoft’s Responsible AI Standard

The need for this type of practical guidance is growing as AI becomes more and more a part of our lives. Yet laws and regulations are lagging behind: they have not caught up with AI’s unique risks or society’s needs. While Microsoft sees signs that government action on AI is expanding, it also recognizes a responsibility to act. As a form of self-regulation, Microsoft says it wants to work towards ensuring AI systems are responsible by design.

Refining Policy and Learning from Product Experiences

Over the course of a year, a multidisciplinary group of researchers, engineers, and policy experts crafted the second version of the Responsible AI Standard. It builds on the company’s previous responsible AI efforts, including the first version of the Standard that launched internally in the fall of 2019, as well as the latest research and some important lessons learned from Microsoft’s own product experiences.

Fairness in Speech-to-Text Technology

The potential of AI systems to exacerbate societal biases and inequities is one of the most widely recognized harms associated with these systems. In March 2020, an academic study revealed that speech-to-text technology across the tech sector produced error rates for members of some Black and African American communities that were nearly double those for white users.

Microsoft learned that its pre-release testing had not accounted satisfactorily for the rich diversity of speech across people with different backgrounds and from different regions. After the study was published, the company engaged an expert sociolinguist to help it better understand this diversity and sought to expand its data collection efforts to narrow the performance gap in its speech-to-text technology. In the process, it found that it needed to grapple with challenging questions about how best to collect data from communities in a way that engages them appropriately and respectfully.

The Redmond team also learned the value of bringing experts into the process early, in part to better understand factors that might account for variations in system performance.

The Responsible AI Standard now records the pattern they followed to improve speech-to-text technology. As that Standard rolls out across the company, Microsoft expects the Fairness Goals and Requirements identified in it will help it get ahead of potential fairness harms.
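To make the idea of a performance gap concrete, the sketch below computes a word error rate (WER) per speaker group and compares the two. It is a minimal illustration under stated assumptions: the group labels, transcripts, and function names are invented toy values, and nothing here reflects the methodology of the cited study or Microsoft’s internal testing.

```python
from collections import defaultdict


def word_edit_distance(reference, hypothesis):
    """Levenshtein distance between two lists of words."""
    prev = list(range(len(hypothesis) + 1))
    for i, ref_word in enumerate(reference, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hypothesis, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of an extra word
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1]


def wer_by_group(samples):
    """Aggregate WER per group from (group, reference, hypothesis) tuples."""
    errors = defaultdict(int)
    words = defaultdict(int)
    for group, reference, hypothesis in samples:
        ref_words = reference.lower().split()
        hyp_words = hypothesis.lower().split()
        errors[group] += word_edit_distance(ref_words, hyp_words)
        words[group] += len(ref_words)
    return {g: errors[g] / words[g] for g in words if words[g] > 0}


# Toy, made-up transcripts used only to exercise the functions.
samples = [
    ("group_a", "turn on the kitchen lights", "turn on the kitchen light"),
    ("group_a", "what time is it now", "what time is it now"),
    ("group_b", "turn on the kitchen lights", "turn on a kitchen light"),
    ("group_b", "what time is it now", "what time is it now"),
]

rates = wer_by_group(samples)
gap_ratio = rates["group_b"] / rates["group_a"]
print(rates, gap_ratio)
```

A real evaluation would need representative, consensually collected recordings for each group and far larger samples; the point here is only that disaggregating an error metric by group is what makes a disparity visible.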

Appropriate Use Controls for Custom Neural Voice and Facial Recognition

Azure AI’s Custom Neural Voice is another innovative Microsoft speech technology that enables the creation of a synthetic voice that sounds nearly identical to the original source. AT&T has brought this technology to life with an award-winning in-store Bugs Bunny experience, and Progressive has brought Flo’s voice to online customer interactions, among uses by many other customers. This technology has exciting potential in education, accessibility, and entertainment, and yet it is also easy to imagine how it could be used to inappropriately impersonate speakers and deceive listeners.

A review of this technology through the lens of the Responsible AI program, including the Sensitive Uses review process required by the Responsible AI Standard, led Microsoft to adopt a layered control framework: it restricted customer access to the service, ensured acceptable use cases were proactively defined and communicated through a Transparency Note and Code of Conduct, and established technical guardrails to help ensure the active participation of the speaker when creating a synthetic voice. Through these and other controls, the goal has been to help protect against misuse while maintaining beneficial uses of the technology.

Building upon what was learned from Custom Neural Voice, Microsoft will apply similar controls to its facial recognition services. After a transition period for existing customers, it will limit access to these services to managed customers and partners, narrow the use cases to predefined acceptable ones, and leverage technical controls engineered into the services.

Fit for Purpose and Azure Face Capabilities

For AI systems to be trustworthy, they need to be appropriate solutions to the problems they are designed to solve. As part of the effort to align the Azure Face service to the requirements of the Responsible AI Standard, the developers are also retiring capabilities that infer emotional states and identity attributes such as gender, age, smile, facial hair, hair, and makeup.

Taking emotional states as an example, Microsoft has decided it will not provide open-ended API access to technology that can scan people’s faces and purport to infer their emotional states based on their facial expressions or movements. Experts inside and outside the company have highlighted the lack of scientific consensus on the definition of “emotions,” the challenges in how inferences generalize across use cases, regions, and demographics, and the heightened privacy concerns around this type of capability.

It was also determined that all AI systems that purport to infer people’s emotional states should be carefully analyzed, whether the systems use facial analysis or any other AI technology. The Fit for Purpose Goal and Requirements in the Responsible AI Standard are intended to help teams make system-specific validity assessments upfront, and the Sensitive Uses process helps provide nuanced guidance for high-impact use cases, grounded in science.

These real-world challenges informed the development of Microsoft’s Responsible AI Standard and demonstrate its impact on the way the company designs, develops, and deploys AI systems.

For those wanting to dig into the approach further, Microsoft has made available some key resources that support the Responsible AI Standard: its Impact Assessment template and guide, and a collection of Transparency Notes. Impact Assessments have proven valuable at Microsoft in ensuring that teams explore the impact of their AI system (including its stakeholders, intended benefits, and potential harms) in depth at the earliest design stages. Transparency Notes are a new form of documentation in which Microsoft discloses to customers the capabilities and limitations of its core building block technologies, so they have the knowledge necessary to make responsible deployment choices.

The Responsible AI Standard is Grounded in Core Principles

A Multidisciplinary, Iterative Journey

The updated Responsible AI Standard reflects hundreds of inputs across Microsoft technologies, professions, and geographies. It represents a significant step forward for the practice of responsible AI because it is much more actionable and concrete: it sets out practical approaches for identifying, measuring, and mitigating harms ahead of time, and requires teams to adopt controls to secure beneficial uses and guard against misuse.

Readers can learn more about the development of Microsoft’s Responsible AI Standard in this video.

A rich and active global dialog is underway about how to create principled and actionable norms to ensure organizations develop and deploy AI responsibly. It is Microsoft’s belief that industry, academia, civil society, and government need to collaborate to advance the state of the art and learn from one another.

Better, more equitable futures will require new guardrails for AI. Microsoft’s Responsible AI Standard is one contribution toward this goal. [24×7]