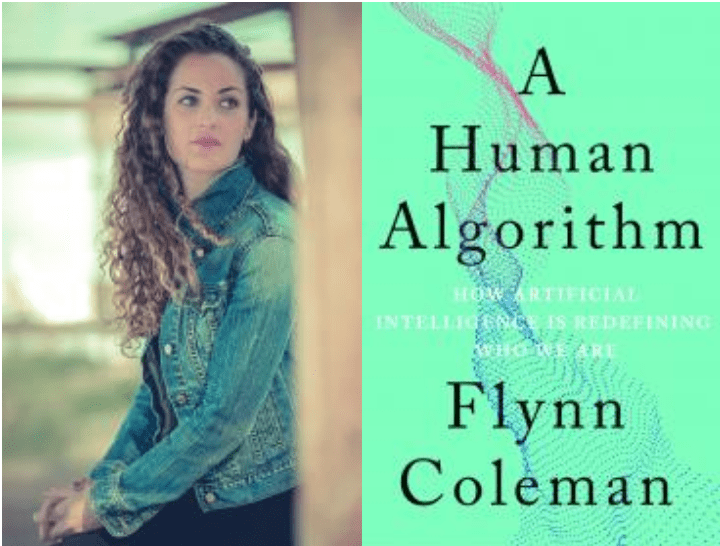

As an international human rights attorney and social innovator, Flynn Coleman has worked tirelessly to restore social justice after periods of governmental, political and economic disruption. Now she has rotated the telescope of her planetary observatory 180 degrees, from a macro view of how cultures have adopted technology to advance as civilized societies to how the new era of artificial intelligence can be navigated for the betterment of all human beings, examining both the potential pitfalls and the possibilities of AI for every global citizen. Who are we at a time when machines begin to control so much of our lives?

After graduating from Georgetown’s School of Foreign Service, the UC Berkeley School of Law and the London School of Economics and Political Science, this global crusader, who has worked for the United Nations, the U.S. federal government and international corporations, turned her attention to justice in the Internet age. Flynn was the founding fellow at the Grunin Center for Law and Social Entrepreneurship at the NYU School of Law, the first center of its kind to educate students and lawyers about the legal issues in the field of social entrepreneurship and impact investing.

It would be a mistake to assume that Coleman’s humanitarian values lead her to see artificial intelligence as anything other than a force for positive social change, even while the technology hangs like the sword of Damocles over the heads of its modern facilitators and end users.

“The twenty-first-century human rights paradigm is about to be dramatically disrupted,” she says. “Whether this is going to be a change for the better or the worse has yet to be determined.”

What is startling, even radical, about Flynn’s outlook for AI is the prescription she writes for making the technology more responsive and accountable. In effect, Flynn believes we need to teach AI to be “moral machines,” value-driven and self-motivated when it comes to human and robot rights.

“What if I told you that in the future, the single most important thing we can do to safeguard humanity will be to teach concepts of rights and values to machines, that the key to decoding this most ancient idea of human rights will be found in the way we choose to develop artificial intelligence or AI, and that the time to do this is now?” she asks.

According to Flynn, instilling AI with a code of ethics is critical. She believes that the intelligent machines currently being designed are the last frontier of invention and innovation in the human experience, because the machines themselves will become better at inventing and innovating than their creators ever have been or ever could be.

In our current environment, we are already being surveilled by complex forms of AI without being fully aware of it, and we use simple forms of AI every day, from Pandora to Netflix to Siri to video games, driverless cars, autonomous drones and robots that can beat us at chess or poker.

Flynn sees this rapid development as a little bit scary but also truly exciting. She is optimistic that AI could give us a chance to finally find global solutions to human rights challenges we have been unable to solve. The vital link between developing AI and creating a more just society will be what we incorporate into our algorithms and machines, so that we impart to them the best values and aspirations human culture has to offer.

“We need to start thinking hard about the values, the patterns, and the code of ethics that we will need to instill in our machines and our laws,” she advises. “Values are different than rules. Rules can be broken or followed for the wrong reason. Values are something deeper. Acting according to our values means we unearth what is beneath the rules and follow them only when we determine they are aligned with our values,” Flynn explains.

“It’s not technology we have to fear, it’s people,” the author says.

No less a visionary than computer scientist Stuart Russell believes that the survival of our species may, in fact, depend on instilling values in AI. One of the big ideas that researchers and futurists are positing is that the most natural way to give robots values is to imprint them with our human stories. Storytelling is one of the keys to instilling these values in robots: taking ideas from culture (movies, poetry, literature, myths and fables) and then reverse engineering what the values are and what it means to be a moral human.

We explored these ethical issues in a deeper dive with the gifted, forward-thinking altruist.

Seattle24x7: Flynn, let’s begin with the basics. How do you define AI?

Coleman: There is no uniform definition of AI that everyone agrees on, but generally, the “reactive” machines in use now, which are developed to mimic human behavior, are known as “narrow” or “weak” forms of AI. By contrast, “general” AIs are those that are able to learn and think for themselves and thus, at least in theory, become intelligent. Artificial general intelligence, also called “strong AI” or AGI, refers to a machine that would have an authentic capacity to “think,” at least “limited memory,” and the ability to perform most human tasks. Artificial superintelligence, or “ASI,” is a speculative technology that would be empathetic and self-aware, and some have suggested there should be a fourth category of AI, “conscious AI.”

Seattle24x7: An important part of your argument is that humans must learn to put their trust in machines. Explain some ways we are already trusting AI today that once seemed impossible.

Coleman: Years ago we wouldn’t set foot in an elevator without a human operator; that idea seems quaint now. We already trust technology to help transport us around the globe, surgically repair our eyes, and manage our money. Few of us realize just how much thinking Google is already doing for us. Our definition of what constitutes true machine intelligence keeps narrowing, a phenomenon known as the AI Effect. We must build machines that we have faith in, because our future existence is going to depend on this trust and partnership.

Seattle24x7: What rights should we, and our future robot friends, possess? The right to keep our personal data and our thoughts private? The right to keep our personality as it was when we were born? What about the right to alter our personality or our brain with these new neural advancements? Or the freedom to think as we choose, a right to cognitive liberty?

Coleman: At this moment, international and domestic rules and standards for governing AI development are only beginning to be seriously weighed. I propose that prior governing instruments, such as climate change protocols, constitutional amendments, and chemical and nuclear weapons bans, can help us draft AI rules and treaties. So too can reviewing our past responses to historical clashes between technology and ethics, looking at both our successes and failures in adopting universal rules to protect us against existential threats.

Seattle24x7: In your book, A Human Algorithm: How Artificial Intelligence Is Redefining Who We Are, you relate a story from the Seattle Aquarium about a giant Pacific octopus named Billye who was given a herring-stuffed medicine bottle with a childproof cap she had to open. What happened?

Coleman: Yes, Billye and her octopus friends had previously been served their supper in jars with lids fastened. They had quickly learned to open them and routinely did so in under a minute. But biologists wanted to see what Billye would do when her meal was secured with a childproof cap—the kind that requires us to read the label instructions and push down and turn simultaneously to open (it still sometimes takes me a few tries). Billye took the bottle and quickly determined that this was no ordinary lid. In less than fifty-five minutes she figured it out and was soon enjoying her herring. With a little practice, she got it down to five minutes.

While an octopus has a good-sized central brain, two-thirds of its neurons are in its eight arms, controlling hundreds of suckers. Octopuses use distributed intelligence to perform multiple tasks simultaneously and independently, something the human brain cannot do. Scientists at Raytheon who are building robotic systems for exploring distant planets believe that octopus intelligence is far better suited than human intelligence to the operational functionality they are seeking, since the robots will require a similarly distributed, multifaceted intelligence.

Humans may not always be the best source for our AI models or the easiest to replicate. Modeling the human brain by trying to hack its 86 billion neurons may indeed be impossible. As the epigram goes: “If the brain were simple enough for us to understand, we’d be too stupid to understand it.” Designing AI inspired by nonhuman life will, I hope, also foster a better appreciation of other species’ essential contributions to our ecosystem and of the importance of valuing all forms of intelligence and all living things.

Seattle24x7: Predictions of net job loss by 2030 due to automation are rather ominous. How might we prepare for this economic hurricane, and what role does AI play?

Coleman: Initially, automation is likely to affect discrete activities within jobs, perhaps as much as 47 percent of them by some estimates, rather than replace whole occupations. Gradually, however, many jobs in their entirety may fall within the competence of AI, and it will become less expensive and more convenient to use and retrain machines than to employ human labor for many types of work.

Two Oxford researchers believe that 45 percent of total U.S. employment is at risk from computerization. An ABI Research report has predicted that one million businesses will have AI technology by 2022.

An Oxford–Yale survey of AI experts forecast that AI will surpass human performance in translating languages by 2024, writing high school essays by 2027, working in retail by 2031, writing best-selling books by 2049, and performing all surgical procedures by 2053.

AI works at higher speed and quality, with greater efficiency, and at a much lower cost than humans. AI can be updated and renewed easily. Moreover, AI makes decisions devoid of impulse and emotion. We are woefully unprepared for the coming automation tsunami.

One reason is that our current thinking about the economy and employment is outdated. It has been outpaced by our technological achievements and by what we now understand about human behavior. Establishing new concepts of work for a new era is urgent. The old models are not sufficient to prevent human workers from becoming obsolete.

Seattle24x7: What might this new, idealistic, post-automation world look like?

Coleman: With wealth and resources distributed more equitably, and with machines assuming much of the work, humans will be defined less by what we do, and more by who we are. When we have fewer banal tasks to do, and thus more time, we can choose to improve not just our own lives, but the lives of many. We can reimagine our relationship with work and labor and tap into our innate sagacity. AI can lead us to the next step in the Cognitive Revolution. To do this, we have to become more curious and creative.

Seattle24x7: The 2018 U.S. Congressional Facebook hearings demonstrated how little our technology leaders and government officials understand each other. What gives you hope that the government will have the will to regulate artificial intelligence?

Coleman: To better manage the looming challenges posed by developing smart technologies, we need to invite the largest possible spectrum of thought into the room. The STEM fields have historically been homogeneous, with relatively few women, people of color, people of different abilities, and people of different socioeconomic backgrounds. To take one example, 70 percent of computer science majors at Stanford are male.

When attending a Recode conference on the impact of digital technology, Microsoft researcher Margaret Mitchell looked out at the attendees and observed “a sea of dudes.” This lack of representation poses many problems, one of which is that the biases of this homogeneous group will become ingrained in the technology it creates.

There are, fortunately, individuals and organizations encouraging diverse groups of people to pursue careers in technology, including Women in Machine Learning (WiML), Black in AI, Lesbians Who Tech, Trans Code, Girls Who Code, Black Girls Code, and Diversity AI, all of which are working hard to help underrepresented groups break into the industry.

Although the tech world is replete with brilliant specialists, the form of genius best suited to our Intelligent Machine Age may be combinatorial creativity. Combinatorial creativity, or what Einstein called “combinatory play,” is connecting the dots between different ideas to create something new and revolutionary. It’s not simply about raw intellectual ability, but about combining ideas in interesting and surprising ways and reformulating them into new concepts.

We also need people with lived experience of social justice issues to be part of the conversation about the future of technology.

Seattle24x7: Algorithmic technology concealed within “black boxes” is also infiltrating our justice and police systems. Many AI systems currently in use are deeply biased; for instance, judicial sentencing decisions made with these kinds of programs have been shown to exhibit bias against Black people. Law enforcement agencies using AI systems already have a face recognition network covering 117 million Americans, and the technology has measurably increased unfairness and injustice, particularly against African Americans.

Coleman: In an Orwellian scenario come true, certain AI-driven law enforcement systems include algorithms that “predict” whether someone will commit a crime. “Pre-crime” AI is now being deployed by police, often targeting innocent people whose fortunes can be predetermined simply by their association with someone who has committed a crime or by their living in a poor neighborhood.

Police departments from Chicago to New Orleans to the United Kingdom are using AI to compile lists of potential criminals and predictive policing to forecast future crime, yet these systems often fail to accurately identify people with Black skin at all.

Technology is not born with cognitive bias; it inherits it from those who code, program and design it. If we want our rapidly advancing AI to be ethical, we need to commit to improving our own internal algorithms. This involves identifying the core principles we want to live by, accepting that we have system flaws, ferreting out and repairing the bugs, monitoring the ways our systems interact with those of other beings, and upgrading our human features to align more and more with who we aspire to be.

Seattle24x7: This is an intensely hopeful, positive book about how humans could thrive by embracing AI. What’s one thing you’d like people to know about what our future with AI could be like?

Coleman: What I would like people to know is that, at this moment, it’s still up to us to act collectively for our common good. Yes, there are some terrifying things that could happen. But there are also many beautiful possibilities. Smart tech can help unburden our mental lives, giving our imaginations room to roam and giving us more time to connect with ourselves and others. Intelligent machines can be powerful tools to support our well-being and help us move into a more curious, creative, and peaceful existence. What might we all do with a life that has more time for dreaming, inventing and exploring?

Seattle24x7: Who is the audience for A Human Algorithm?

Coleman: I wrote this book to be accessible to a wide audience, and it emanates from a broad, human perspective. I believe that people already invested in the science and technology world will gain new insights, and that the topic should also be approachable for readers who may have felt intimidated about going deeper into the subject.

Seattle24x7: Would you consider A Human Algorithm to be a philosophical book?

Coleman: Yes, very much so. It’s also a historical, scientific, political, and spiritual book. [24×7]